Transforming Data Workflows: dbt implementation at GetYourGuide

Explore how GetYourGuide transformed their data workflows by integrating dbt with Databricks, Airflow, and Monte Carlo. Learn about migrating from custom Spark jobs, optimizing CI/CD, automating documentation with AI, and orchestrating dbt models using Astronomer Cosmos.

Key takeaways:

In the second part of this blog series, Giovanni Corsetti, a data Engineer at Core Data Platform, explores the integration of dbt, airflow, databricks and Monte Carlo. Learn about migrating from custom Databricks jobs, optimizing CI/CD, automating documentation with AI, and orchestrating dbt models using Astronomer Cosmos.

Introduction

Previously, GetYourGuide relied on Rivulus, a custom-built data transformation tool for Spark SQL transformations running within Databricks job clusters. This in-house tool came with its own set of headaches: heavy maintenance demands, a steep learning curve for new team members, and tricky debugging due to its tight coupling with Apache Airflow. To tackle these issues head-on, GetYourGuide decided it was time to switch to dbt, the industry-leading tool for data analysis and business intelligence.

Initially, the migration plan involved integrating dbt to the existing Rivulus job-triggering setup within Databricks job clusters. However, this approach quickly proved problematic, as detailed in part one, “dbt & Databricks: Using job clusters for data pipelines”. The friction caused by fitting the new dbt framework into legacy Rivulus constraints was counterproductive, prompting a strategic reevaluation.

Ultimately, GetYourGuide adopted a more efficient solution: integrating dbt with the Databricks serverless platform. This allowed the team to capitalize on the straightforward, open-source dbt-databricks connector, saving valuable time, reducing complexity, and paving the way for smoother operations.

To implement dbt at GetYourGuide, we needed the following elements:

- A local development with clear access control policies in place

- A seamless integration with Databricks

- A production setup that could be fully integrated with Airflow, with visual features that allowed us to rerun failed production models without much of an overhead

A local development with dbt

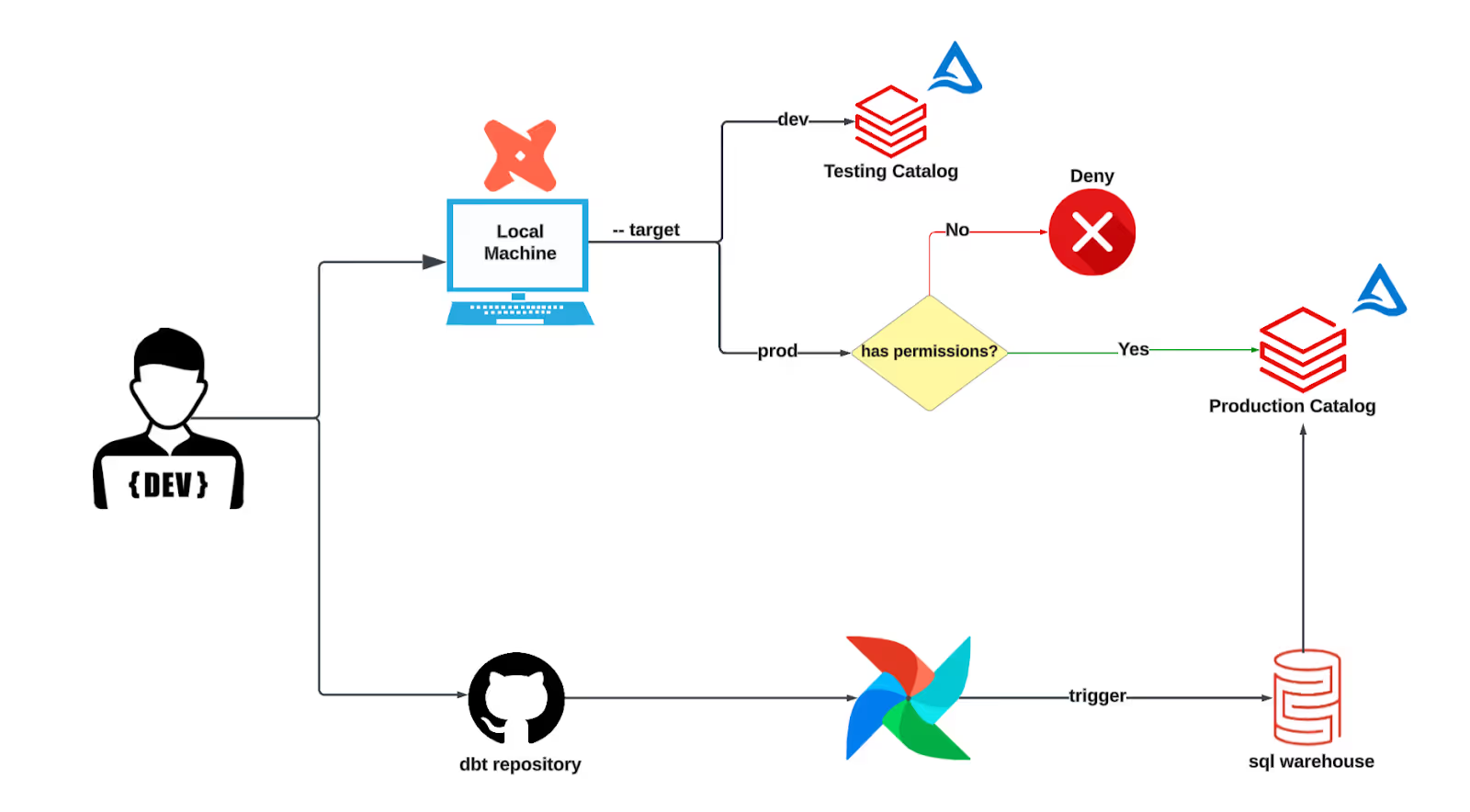

Implementing dbt should empower developers by giving them complete control over their data transformation models through simple CLI commands while maintaining strong data access control to prevent unauthorized schema or data changes. Additionally, when transformations run in a testing environment, automatic data sampling mechanisms ensure minimal impact on the data warehouse.

To streamline management, we established two Databricks catalogs: one for production and another for testing, named production and testing catalogs, respectively. To further enhance this structure, we have customized the default location_root macro. This directs tables created in the production catalog to a dedicated domain-specific S3 bucket, while tables in the testing catalog are stored in a general testing bucket. This clear separation simplifies access control significantly.

Fortunately, the dbt-databricks adapter allows users to define connections in the profiles.yml file, specifying the desired catalog directly. By setting the testing catalog as the target output within profiles.yml, users can safely experiment in a sandbox environment where tables are always created using sampled data. To achieve this, we have replaced some default macros:

- ref() → sampled_ref()

- source() → sampled_source()

As their names suggest, these macros add sampling logic for non-production targets, reducing overhead from full-table scans in Databricks. For flexibility, we have introduced a variable to disable sampling when necessary. We have also trained our analysts on leveraging the dbt --defer flag, enabling them to reference full production table paths when required.

On the Databricks side, permissions are easily managed based on the personal access token (PAT) specified in each user’s profiles.yml. This allows the data core platform team to enforce access control policies directly according to who submits the query. This setup has significantly improved development speed and simplified critical processes, including backfilling tables and debugging pipeline issues.

dbt within databricks

UDFS

Previously at GetYourGuide, we handled SQL transformations through job clusters triggered by Rivulus. Each job required explicitly defined cluster specifications to optimize resource utilization. While this approach sounded efficient on paper, it quickly became cumbersome in practice. Every new transformation introduced another YAML file to manage, prompting teams to bundle transformations into fewer, larger jobs to avoid the hassle. This not only complicated our workflows but also made tasks unnecessarily bulky and difficult to maintain.

Moreover, maintaining these job clusters was a demanding task. As datasets grew - and they certainly did - engineers frequently had to manually resize clusters to match increased demands. Inevitably, users would often overestimate their cluster sizes, resulting in oversized clusters and inflated costs. This manual tweaking was not only time-consuming but also error-prone, leading to suboptimal resource usage and higher operational expenses.

When GetYourGuide transitioned to dbt, we replaced legacy job clusters with Databricks SQL warehouses due to dbt-databrick compatibility. According to Databricks documentation, SQL warehouses are specifically optimized for SQL analytics and interactive queries, dynamically adjusting compute resources in real-time to handle varying workloads efficiently. To our pleasant surprise, this shift resulted in approximately 20% cost savings and a remarkable 60% reduction in execution times for our transformations. These improvements stemmed from SQL warehouses’ ability to automatically scale resources according to query complexity and concurrency, significantly enhancing both performance and cost efficiency.

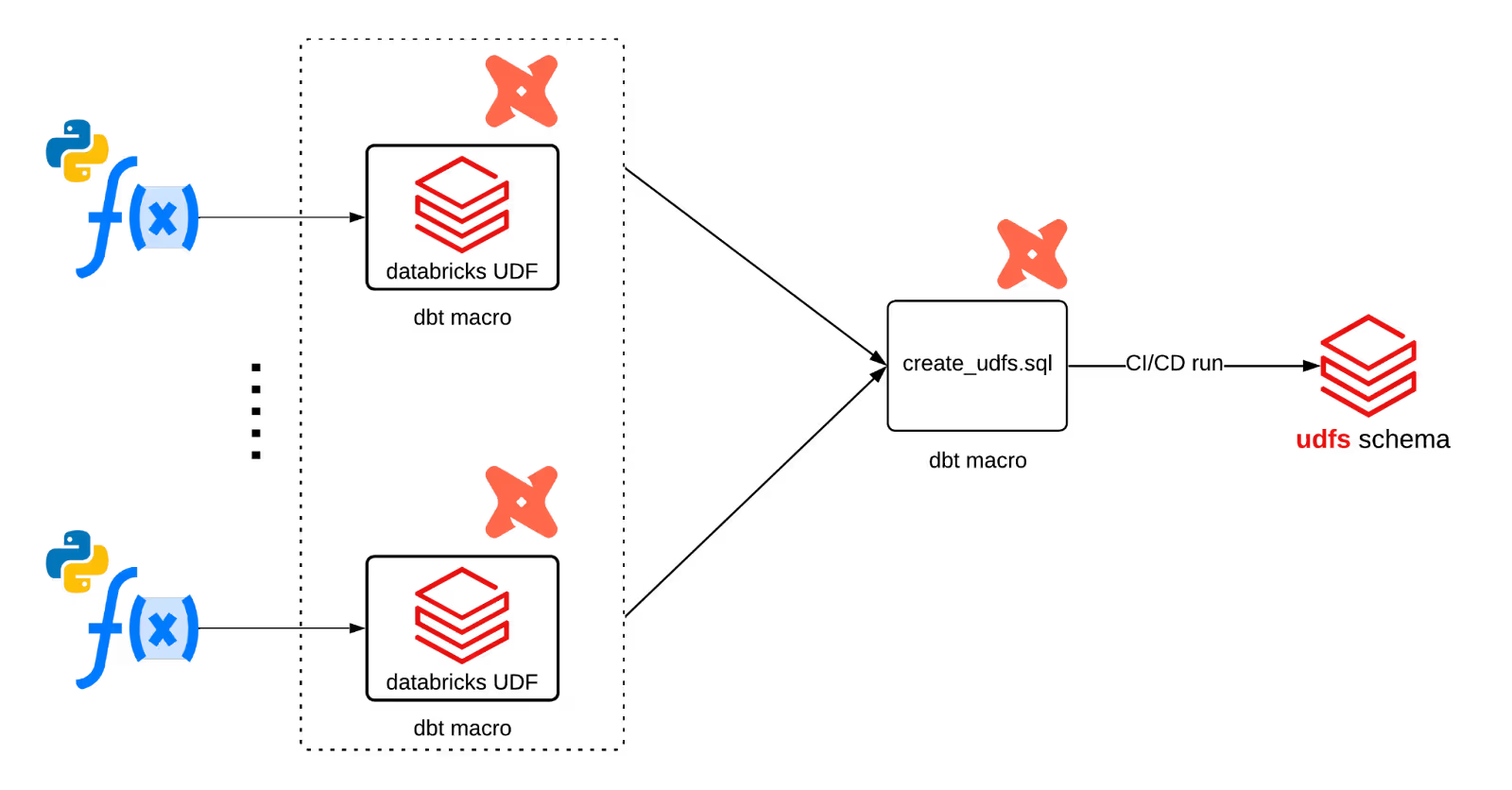

However, the migration wasn’t without hurdles. One significant challenge was the handling of User Defined Functions (UDFs). Our previous setup relied heavily on Scala UDFs, which, unfortunately, are not supported by SQL warehouses at the time of writing. This forced us into a comprehensive refactoring effort, converting Scala UDFs into Python-based implementations compatible with SQL warehouses. To systematically address this, we adopted a structured approach: each UDF was encapsulated into its own dbt macro, leveraging Databricks' CREATE OR REPLACE FUNCTION syntax. A master macro, create_udfs.sql, was then developed to iterate over these individual macros, executing each through dbt's run_query command for modular deployment. This innovative method allowed us to maintain clear, version-controlled definitions of our UDFs directly within our codebase, ensuring consistent deployment and easy management.

Additionally, integrating this process into our Continuous Integration and Continuous Deployment (CI/CD) pipeline significantly streamlined our workflow. Now, any changes to UDFs automatically trigger their recreation in the default branch deployment, ensuring consistency and reliability. To further bolster our confidence in these transformations, we implemented comprehensive unit tests for every single UDF, effectively safeguarding against potential regressions or unintended side effects.

Documenting tables with AI

At GetYourGuide, we recognized the immense value of having comprehensive documentation for our dbt models early on. Thanks to dbt’s powerful "documentation-as-code" feature and its seamless integration with the Databricks UI, documenting our models became not just desirable, but essential. However, the sheer scale of our dbt data ecosystem — over 1,000 models, each requiring detailed column-level descriptions — posed a daunting challenge. Manually documenting each column across hundreds of models was simply not feasible.

Fortunately, Databricks recently introduced the ai_query command, currently available in public preview. This innovative feature leverages AI to automatically generate accurate and meaningful documentation, significantly reducing the manual effort required. At GetYourGuide, we quickly adopted this functionality, initially experimenting by feeding our internal documentation directly into ai_query. While this produced some promising results, we knew there was room for improvement.

Through further experimentation, we discovered that the accuracy and clarity of our AI-generated documentation dramatically improved when we provided richer context to the ai_query command. Specifically, we enriched our inputs by combining the original dbt SQL file and the corresponding schema YAML file alongside our internal documentation. This multi-layered approach allowed the AI to better understand the structure, relationships, and intent behind each column, resulting in much more precise and context-aware column descriptions.

The process, illustrated clearly in the diagram above, involves taking these three sources: the schema YAML file, the dbt SQL file, and our internal documentation, and feeding them into the ai_query command. The AI then synthesizes this information to produce a fully populated schema YAML file, complete with detailed, accurate, and insightful column-level documentation for each dbt model. This approach not only saves significant time but also ensures consistency and quality across all of our documentation.

By harnessing Databricks’ AI capabilities, we transformed a previously overwhelming documentation task into a streamlined, automated, and highly effective process. This advancement empowers our teams to maintain high-quality documentation effortlessly, enhancing transparency, collaboration, and efficiency across the entire data organization.

dbt and airflow

At GetYourGuide, integrating Apache Airflow into our data stack was a strategic move aimed at enhancing observability and control of our dbt models. To achieve a seamless integration, we chose the Astronomer Cosmos library, a powerful tool specifically designed to render dbt models as Airflow DAGs for orchestration and scheduling. This allowed us not only to visualize our dbt workflows directly in Airflow’s intuitive UI but also provided a convenient way to visually retrigger tasks when needed.

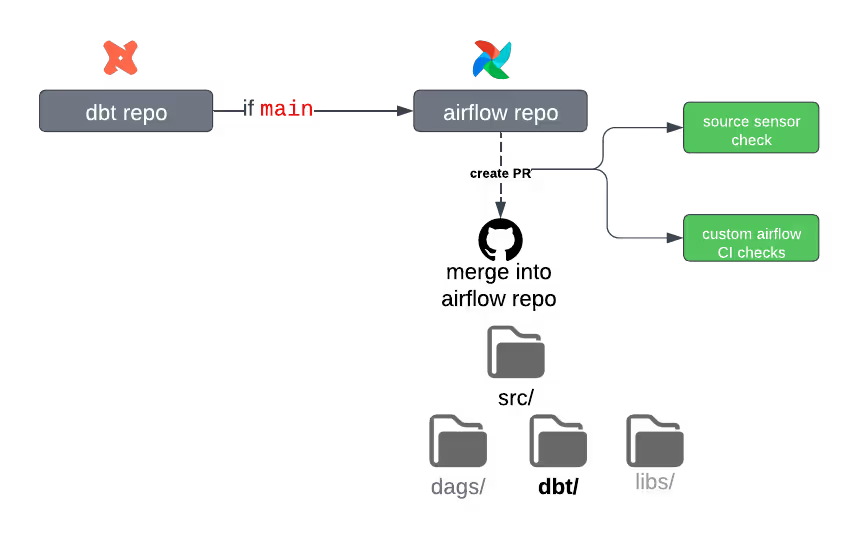

However, we quickly encountered a best-practice challenge. Since Airflow and dbt are fundamentally different technologies serving distinct purposes, combining their respective files in a single repository would complicate maintenance and violate the separation of concerns. Therefore, we opted for a cleaner solution: maintaining separate repositories for dbt and Airflow, connected through an automated CI/CD workflow that syncs dbt changes into the Airflow repository. As illustrated in the diagram above, whenever changes are merged into the main branch of our dbt repository, an automatic pull request is triggered to merge these changes into the Airflow repository. This PR then undergoes rigorous CI/CD checks, including both generic validations and custom Airflow-specific tests, ensuring nothing breaks in production.

Though this approach significantly improved the overall organization and clarity of our project, it introduced a practical bottleneck: the time-consuming CI/CD cycles. Each dbt change needed to pass through two separate CI/CD pipelines — first for dbt and then again for Airflow — causing delays in merging and deployment. To address this, we explored solutions to optimize the CI/CD process.

CI/CD optimisation

One highly effective improvement was adopting Astral UV, an innovative Python installation and package management tool built in Rust. Astral UV dramatically sped up our Python-related GitHub Actions by approximately 73%, significantly reducing the time required to install dependencies and manage packages. Since our pipelines heavily rely on Python installations, particularly for dbt-core and dbt-databricks packages, this optimization significantly improved overall CI/CD efficiency.

Additionally, we identified another valuable optimization: reusing dbt artifacts generated from previous CI/CD runs. By leveraging dbt’s built-in partial parsing feature - activated by default - we stored these artifacts in AWS S3 and reused them in subsequent pipeline executions. This strategy resulted in an impressive 41% performance gain during dbt compilation steps. Consequently, this optimization not only accelerated compilation but also streamlined all subsequent dbt-related tasks, including testing transformations, generating static documentation, and seeding operations.

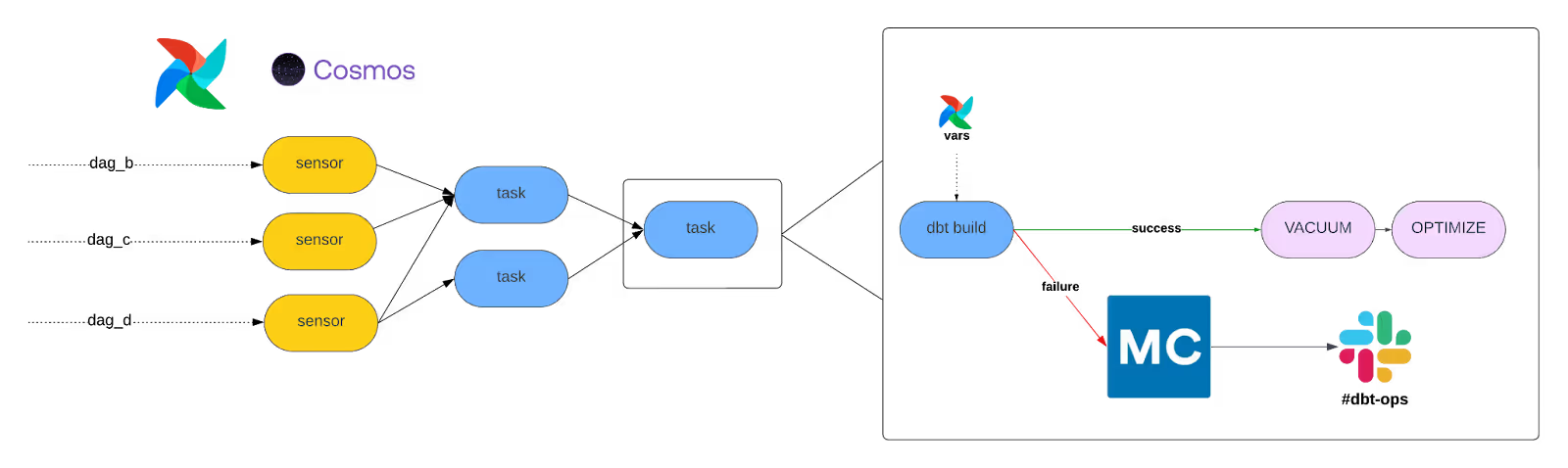

Cosmos and dbt together

Integrating Astronomer Cosmos with our dbt setup significantly enhanced our data workflows by providing a visual, user-friendly interface for managing and orchestrating dbt models within Apache Airflow. Cosmos allowed us to effortlessly convert complex dbt projects into intuitive Airflow DAGs, drastically reducing the complexity of managing dependencies and tasks. By leveraging Cosmos, we gained comprehensive visibility into our dbt tasks directly within Airflow's UI, enabling easier monitoring, troubleshooting, and management of data pipelines. This effectively gave us a "UI for dbt", combining the powerful SQL transformation capabilities of dbt with Airflow’s robust scheduling, observability, and orchestration features.

Furthermore, Cosmos allowed us to seamlessly integrate native Airflow functionalities such as task retries, alerts, and data lineage tracking into our dbt workflows. We could run dbt models directly against Airflow connections, eliminating the need for separate dbt profiles. This integration significantly streamlined our data operations, enabling us to utilize dbt's vanilla products alongside Airflow's extensive add-ons, ultimately improving operational efficiency and reducing the overhead associated with managing separate tools and workflows.

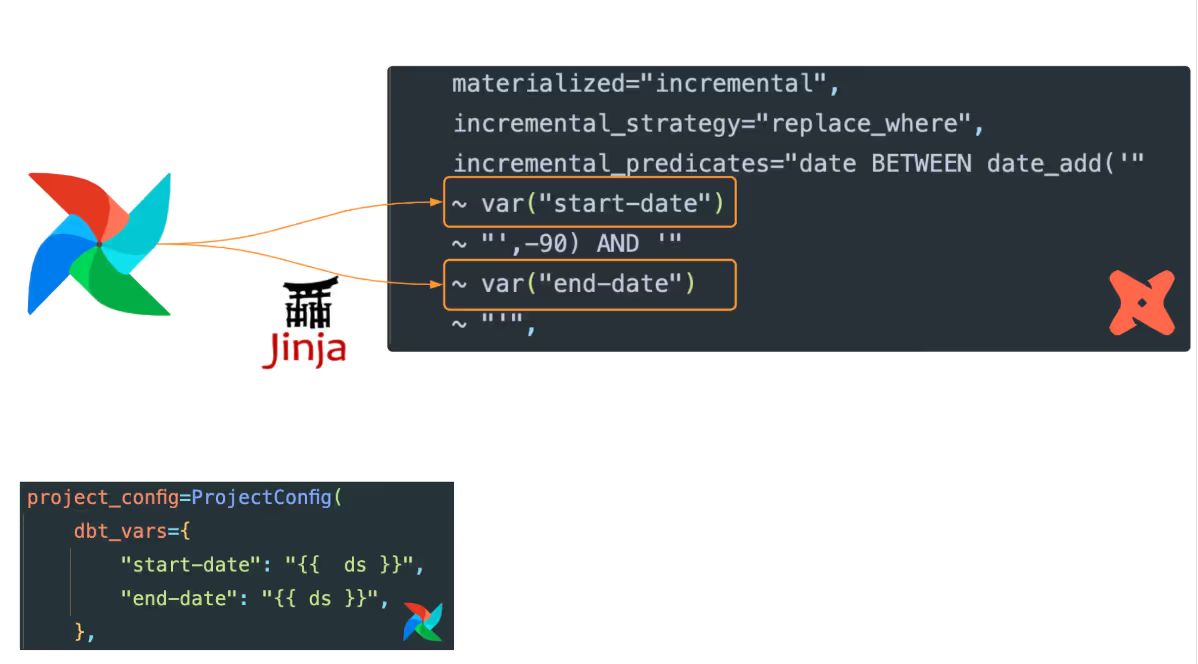

One quick win involved leveraging Airflow Jinja templating with Astronomer Cosmos, which significantly simplified our handling of dbt incremental models. As depicted in the image, we dynamically replaced dbt model variables with Airflow’s Jinja template variables representing the execution date. This allowed us to efficiently schedule and execute incremental dbt runs directly through Airflow. By integrating Jinja templating, we could easily define dynamic date ranges for incremental predicates, making our workflows more flexible and adaptable to changing data conditions. This integration provided multiple advantages, notably simplifying the orchestration and improving the maintainability of our incremental data pipelines. By using Jinja templates, we avoided hardcoding dates or manually updating variables, thus reducing errors and operational overhead.

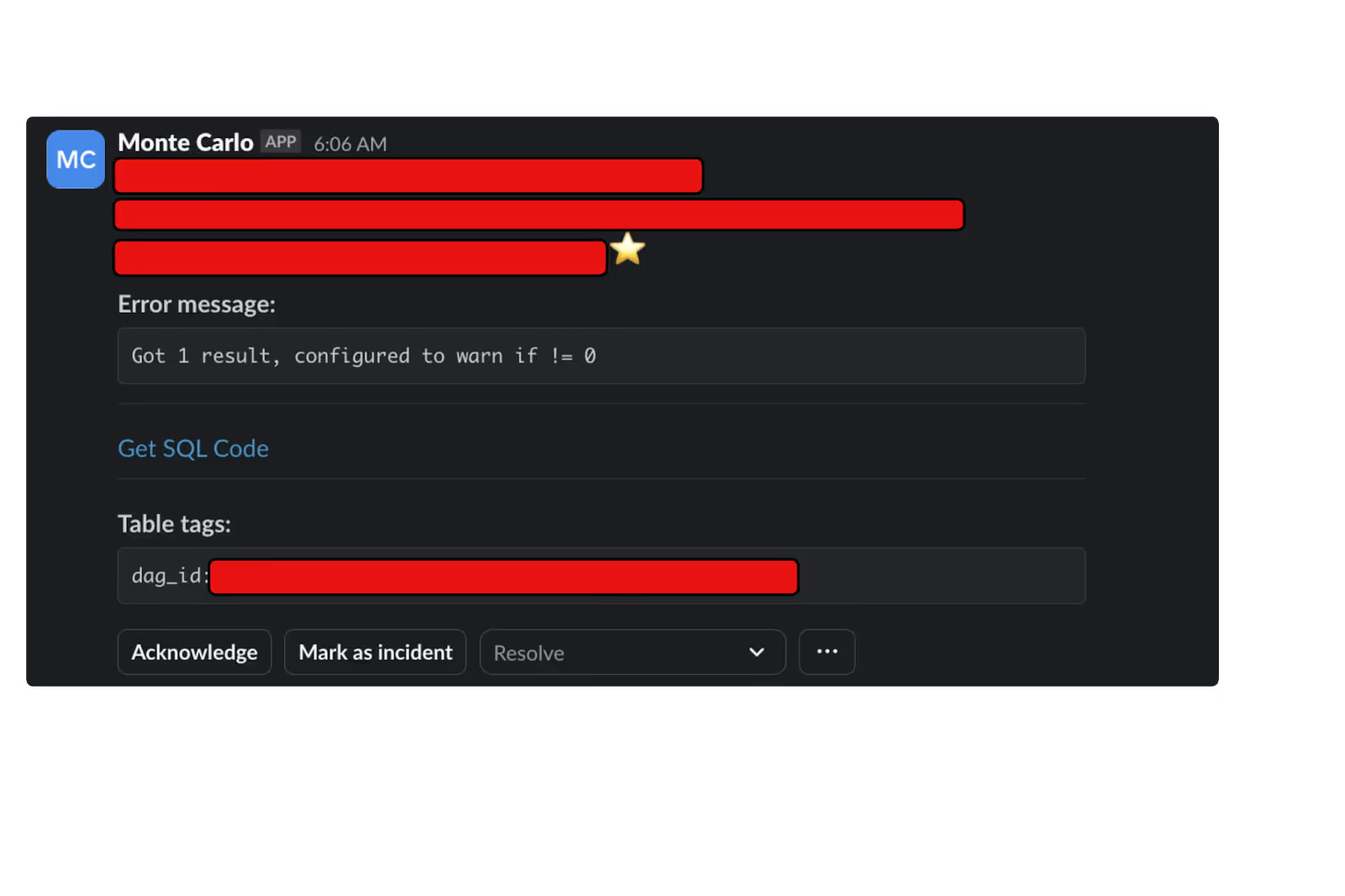

In addition, integrating Monte Carlo into our dbt and Airflow pipeline has significantly enhanced our data observability and reliability. After executing our dbt jobs through Airflow, we utilize the generated run_results.json file to feed critical information directly to Monte Carlo. This integration allows us to automatically flag dbt test failures and model errors as Monte Carlo incidents, streamlining our troubleshooting processes and enabling faster resolution of data issues. Moreover, this setup extends our observability capabilities by integrating seamlessly with Slack, ensuring real-time notifications. Whenever Monte Carlo detects anomalies or errors based on dbt run results, it immediately sends alerts directly to our Slack channels.

Final Remarks

The adoption of dbt at GetYourGuide has fundamentally transformed our data operations, creating a robust, scalable, and maintainable data ecosystem. By integrating dbt seamlessly with Databricks serverless, leveraging Astronomer Cosmos for intuitive Airflow orchestration, and employing Monte Carlo for comprehensive data observability, we have successfully streamlined our workflows, enhanced productivity, and significantly improved our data reliability. These strategic choices not only simplified our technical stack but also empowered our teams to innovate faster and with greater confidence. Moving forward, we aim to further enhance the current setup by leveraging existing integrations and automating some processes.

Interested in redefining the travel experience sector alongside some of the top tech talent in the world? Check out our open roles and learn more about our team here.

.JPG)

.JPG)