From Zero to Hero: GetYourGuide’s intelligent approach to new activities

Discover how GetYourGuide tackles the “cold start” problem in marketplaces with a Bayesian approach. Learn how intelligent modeling helps balance risk, personalize recommendations, and unlock the potential of new travel activities worldwide.

Matching supply and demand is the lifeblood of every marketplace. At GetYourGuide, this challenge is particularly fascinating: the goal is more than connecting buyers and sellers; we’re helping travelers discover experiences that will become lifelong memories.

With 35,000 suppliers offering over 150,000 activities across 12,000 cities worldwide, we’ve built significant scale. But more importantly, we’ve built a curated marketplace focused on quality, not just quantity.

What makes this especially challenging is the unique nature of experiences. They are deeply personal, harder to compare, and often exist in a market that’s still largely offline. How do you evaluate a new food tour in Bangkok against a cooking class in the same city when they’ve just been added to the platform?

This is where innovative technical approaches become crucial. Modeling helps answer a key question: how much information is enough to make smart decisions about new activities? That’s the focus of this article. By applying Bayesian thinking to this complex problem space, we can better personalize our vast assortment to each traveler’s needs, even when dealing with activities that have little historical data.

The ‘cold start’ problem

The ‘cold start’ problem is a well-known challenge in marketplaces and specifically in recommender systems. Imagine you are responsible for GetYourGuide’s performance in a given region, and a supplier submits a new cooking class in a city in which you already have plenty of cooking classes. How would you prioritize which of these are shown to users searching for ‘cooking classes in [city name]’ on our platform? Would you give the new cooking class a try, or would you rather stick to what works? Ultimately, it comes down to how much risk you are willing to take.

Taken to the extreme, if you only sell the new activity, you may be pushing something that is simply worse than your proven offerings. And if you never expose the new activity, you’ll never learn whether customers would have liked it.

Not taking a risk is risky, as you may never learn about what’s new and become outdated. Taking too much risk is also risky, as you may ruin the user experience. How can we collect information about the performance of a new product while keeping risk under control?

One thing more important than train_predict() is framing your problem the right way

When we first approached this problem, our instinct was to use ML models to predict whether an activity would be successful, either as a regression (“how many bookings will this activity get in X days?”) or as a classification problem (“will this activity reach N bookings in X days?”). These approaches sounded great on paper, but they had a foundational flaw: the problem at hand is not to predict whether an activity will do well, but to manage the risk of giving it exposure and to ensure that we learn from that exposure as intelligently and efficiently as possible. This mindset shift goes beyond data and extends to how the business understands the problem.

Traditional ML models have their place, but they weren’t ideal for this particular challenge, as:

- They’re better suited for static predictions based on activity characteristics

- They’re not particularly designed for continuously updating beliefs as new data trickles in

- They don’t naturally express uncertainty, which is crucial for risk management

- There is no clear objective function for our use case

This is why we pivoted to a Bayesian approach. Rather than trying to make a single prediction, Bayesian methods:

- Start with a prior distribution based on our existing knowledge

- Update this distribution as new evidence comes in

- Naturally model uncertainty over time, showing how our confidence increases with more data

- Enable stopping the exploration when the improvement plateaus

The key difference is philosophical: ML models try to predict a future outcome, while Bayesian approaches help us understand how much we know (or don’t know) right now, and how that changes with new information.

The ins and outs of Bayesian modeling

Bayesian modeling provides an elegant framework for systematically updating our beliefs as we gather more evidence. The foundation of our approach is the Beta-Binomial model, which is suited to estimating the probability of a binary outcome (for example, whether a displayed activity is hovered over or clicked). The Binomial distribution models the number of successes in a fixed number of independent trials, while the Beta distribution serves as a convenient prior: because it is conjugate to the Binomial, the posterior is again a Beta distribution, which is simply updated as new data arrives.
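In symbols, the update is a single conjugate step. Writing p for an activity’s unknown success probability, n for the number of impressions and k for the number of successes among them:

```latex
\begin{aligned}
p &\sim \mathrm{Beta}(\alpha, \beta), \qquad k \mid p \sim \mathrm{Binomial}(n, p) \\
p \mid k &\sim \mathrm{Beta}(\alpha + k,\; \beta + n - k) \\
\mathbb{E}[p \mid k] &= \frac{\alpha + k}{\alpha + \beta + n}
\end{aligned}
```

The prior parameters behave like pseudo-counts: α + β is worth that many impressions, so a small α + β lets the observed data dominate quickly, while a large one makes the estimate slow to move.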

When applying this to our activities at GetYourGuide, we need to keep a few key points in mind:

Independence assumption: We assume each impression is an independent trial, which works well until an activity gets its first booking and potentially receives reviews. Users may view the same activity multiple times, so their impressions are not fully independent, but we consider this a good-enough approximation. Independence means that one trial doesn’t directly affect another trial’s probability of success.

Constant probability: We assume the success probability remains relatively stable across impressions for a given activity. This assumption can break down when significant changes occur, such as when an activity receives its first reviews or is added to a landing page whose performance differs significantly.

Prior knowledge: We can incorporate what we already know about similar activities into our starting point. Defining “similar” is a business call: the prior might be split by characteristics of the activity or the offering, by geography, and so on, or use no subsplits at all.
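A minimal sketch of building such a prior, assuming historical clicks and impressions are available per segment. The segment keys, the `prior_strength` pseudo-count and the counts below are hypothetical, not GetYourGuide data. The prior mean is the segment’s pooled click-through rate, and a separate strength parameter sets how many impressions’ worth of weight the prior carries.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BetaPrior:
    alpha: float
    beta: float


def segment_prior(clicks: int, impressions: int, prior_strength: float) -> BetaPrior:
    """Beta prior centred on a segment's pooled click-through rate.

    prior_strength is a hypothetical pseudo-count: the prior counts as if the
    new activity had already received that many impressions.
    """
    if impressions <= 0 or not 0 <= clicks <= impressions:
        raise ValueError("need impressions > 0 and 0 <= clicks <= impressions")
    mean = clicks / impressions
    # Clip the mean so both Beta parameters stay strictly positive.
    mean = min(max(mean, 1e-6), 1 - 1e-6)
    return BetaPrior(alpha=mean * prior_strength, beta=(1 - mean) * prior_strength)


# Hypothetical pooled history per (city, category) segment: (clicks, impressions).
history = {
    ("Bangkok", "cooking class"): (1_200, 30_000),
    ("Bangkok", "food tour"): (900, 15_000),
}
# Fallback when no subsplit is used: pool all segments into one global prior.
global_clicks = sum(c for c, _ in history.values())
global_impressions = sum(n for _, n in history.values())

prior = segment_prior(*history[("Bangkok", "cooking class")], prior_strength=20)
fallback = segment_prior(global_clicks, global_impressions, prior_strength=20)
```

Keeping the mean and the strength separate turns the business question of how much weight past performance deserves into a single tunable number.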

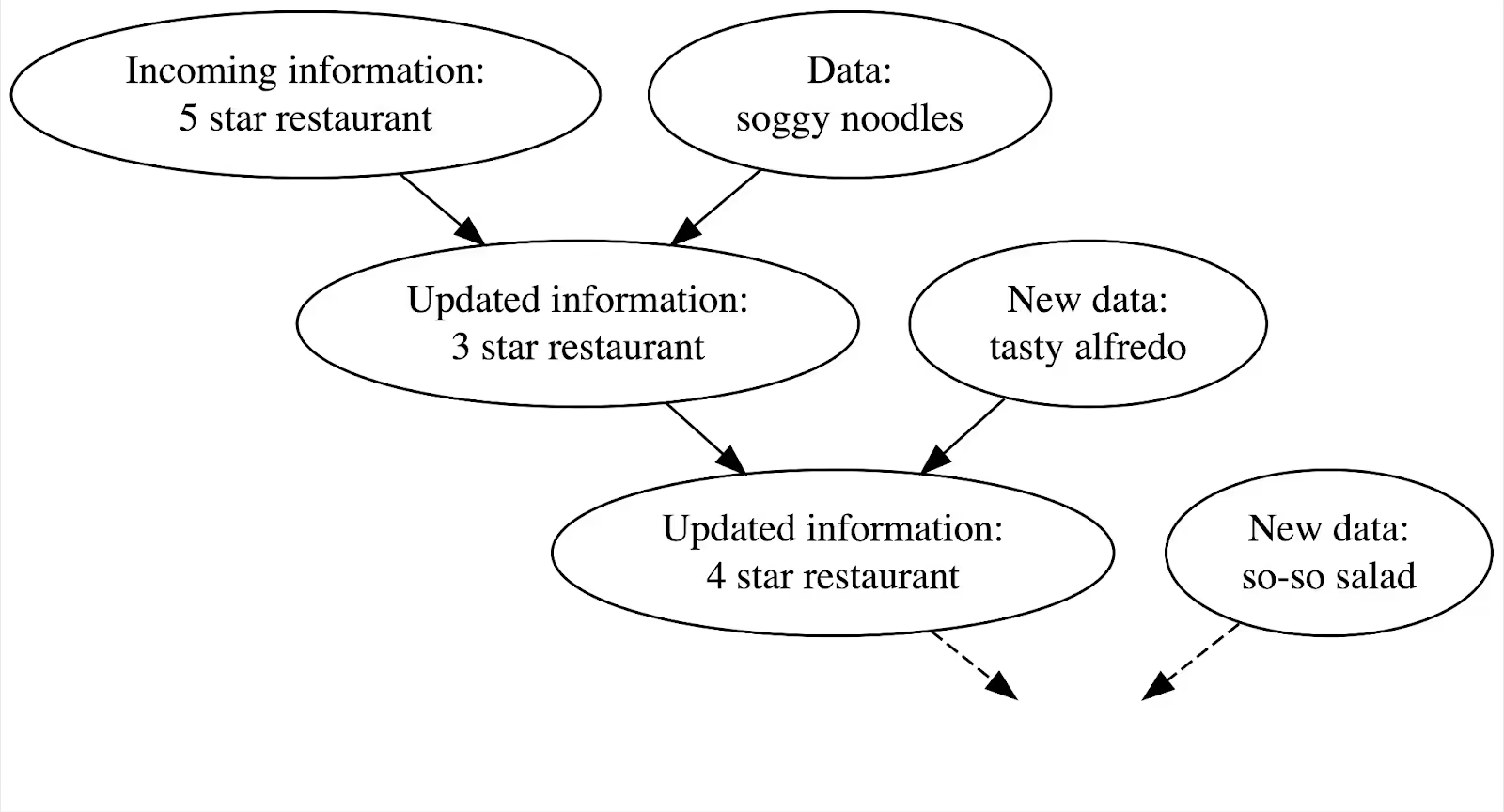

The beauty of this approach is how naturally it represents uncertainty through probability distributions, which narrow as we gather more data. Each data point refines our understanding of an activity’s performance, much as you refine your picture of a restaurant before going there for dinner: you look it up on Google Maps, glance at the reviews, read one review, then another, and the more you read, the sharper the picture in your head becomes.

Chart from https://www.bayesrulesbook.com/chapter-1 illustrating the mechanics of Bayesian thinking. For the mathematical details behind Bayesian modelling, Chapter 3 of Bayes Rules! is an excellent reference.
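To make the narrowing concrete, the sketch below computes the width of the central 95% interval of the Beta posterior for a hypothetical activity with a constant 4% click-through rate after increasing numbers of impressions, starting from a uniform Beta(1, 1) prior. The rate and impression counts are illustrative assumptions.

```python
from scipy.stats import beta


def interval_width(successes: int, trials: int, level: float = 0.95,
                   prior_alpha: float = 1.0, prior_beta: float = 1.0) -> float:
    """Width of the central interval of the Beta posterior after `trials` impressions."""
    lower, upper = beta.interval(level, prior_alpha + successes,
                                 prior_beta + trials - successes)
    return upper - lower


true_rate = 0.04  # hypothetical click-through rate, held constant
widths = {}
for trials in (50, 500, 5_000, 50_000):
    successes = round(true_rate * trials)
    widths[trials] = interval_width(successes, trials)
    print(f"{trials:>6} impressions -> 95% interval width {widths[trials]:.4f}")
```

The width shrinks roughly with the square root of the number of impressions, so each additional order of magnitude of exposure buys a smaller absolute gain in certainty.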

Our application at GetYourGuide

Defining how much relevance we give to past performance and when to stop the Bayesian exploration

Building an effective Bayesian model for marketplace activities requires both technical understanding and business acumen. Two crucial aspects we needed to define were: the weight of the prior and the stopping criteria.

Again, the weight of the prior is primarily a business decision, not just a technical one. We had to consider: Do we want previous data to strongly influence how we evaluate new activities? Are activities in different price brackets or countries similar enough to inform each other? How much inertia should the prior impose on an activity that behaves radically differently from the rest of its bucket?

These questions shaped how much weight we gave to our prior distributions. In this application, we decided to give the prior an extremely light weight, allowing the model to adapt quickly to activities that are radically different from our existing offerings. If your offering is more homogeneous, giving the prior more weight may be the better choice. One way to decide is to look at how much performance varies across activities once they have reached a given level of exposure, and use that variance to inform the choice.
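One way to turn that variance into a prior weight is a method-of-moments fit, sketched below under stated assumptions: take the observed click-through rates of established activities that have each reached a minimum exposure (so sampling noise is small), and choose the Beta prior whose mean and variance match them. The function name and example rates are hypothetical. A large spread across activities yields a small α + β, i.e. a light prior.

```python
import statistics


def fit_beta_prior(rates: list[float]) -> tuple[float, float]:
    """Method-of-moments Beta fit to per-activity success rates.

    Assumes every rate comes from an activity with enough impressions that
    binomial sampling noise is negligible next to the true spread.
    """
    if len(rates) < 2:
        raise ValueError("need at least two rates")
    m = statistics.fmean(rates)
    v = statistics.variance(rates)  # sample variance across activities
    if v <= 0 or v >= m * (1 - m):
        raise ValueError("variance must lie strictly between 0 and m * (1 - m)")
    strength = m * (1 - m) / v - 1  # alpha + beta: the prior's pseudo-count
    return m * strength, (1 - m) * strength


# Hypothetical rates for two buckets of well-exposed activities.
homogeneous = [0.040, 0.042, 0.038, 0.041, 0.039]   # activities behave alike
heterogeneous = [0.010, 0.080, 0.025, 0.060, 0.015]  # activities differ a lot

a_h, b_h = fit_beta_prior(homogeneous)    # heavy prior
a_x, b_x = fit_beta_prior(heterogeneous)  # light prior
```

In practice the observed rates also contain binomial noise, which inflates the variance and makes the fitted prior lighter still; restricting the fit to well-exposed activities keeps that effect small.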

For stopping criteria, we explored two complementary approaches:

- Using the width of the confidence interval: once we’re confident enough about an activity’s performance (a narrow CI), we can make reliable decisions.

- Using information-theoretic metrics such as the Kullback-Leibler (KL) divergence to measure how much new information each batch of impressions adds.
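Both criteria have closed forms for Beta posteriors. The sketch below is an illustration, not the production code: it computes the width of the central 95% interval and the KL divergence of the updated posterior from the previous one, using the standard closed-form KL between two Beta distributions. Function names and the example counts are hypothetical.

```python
from scipy.special import betaln, digamma
from scipy.stats import beta


def ci_width(a: float, b: float, level: float = 0.95) -> float:
    """Width of the central interval of Beta(a, b)."""
    lower, upper = beta.interval(level, a, b)
    return upper - lower


def kl_beta(a1: float, b1: float, a2: float, b2: float) -> float:
    """KL(Beta(a1, b1) || Beta(a2, b2)) in nats, closed form."""
    return (
        betaln(a2, b2) - betaln(a1, b1)
        + (a1 - a2) * digamma(a1)
        + (b1 - b2) * digamma(b1)
        + (a2 - a1 + b2 - b1) * digamma(a1 + b1)
    )


# Hypothetical posterior before and after a batch of 200 impressions with 8 clicks.
old_a, old_b = 21.0, 481.0
new_a, new_b = old_a + 8, old_b + 200 - 8

# Information gained by the batch: divergence of the new posterior from the old one.
gain = kl_beta(new_a, new_b, old_a, old_b)
width_before, width_after = ci_width(old_a, old_b), ci_width(new_a, new_b)
```

KL divergence is asymmetric; measuring the new posterior against the old one reads naturally as “how far did this batch move our belief”.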

In either case, the update frequency influences the stopping criteria, so factor it in when designing yours.
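A small illustration of why cadence matters, assuming the same hypothetical 700 impressions and 28 clicks arrive either as seven daily batches or as one weekly batch. Both paths end in the same posterior, but the per-update KL divergence differs sharply, so a KL threshold tuned for daily updates cannot be reused unchanged for weekly ones.

```python
from scipy.special import betaln, digamma


def kl_beta(a1, b1, a2, b2):
    """Closed-form KL(Beta(a1, b1) || Beta(a2, b2)) in nats."""
    return (betaln(a2, b2) - betaln(a1, b1)
            + (a1 - a2) * digamma(a1) + (b1 - b2) * digamma(b1)
            + (a2 - a1 + b2 - b1) * digamma(a1 + b1))


start_a, start_b = 21.0, 481.0  # hypothetical posterior carried over from earlier data
daily = [(100, 4)] * 7          # hypothetical (impressions, clicks) per day

# Daily cadence: one KL value per update.
a, b = start_a, start_b
daily_kl = []
for impressions, clicks in daily:
    new_a, new_b = a + clicks, b + impressions - clicks
    daily_kl.append(kl_beta(new_a, new_b, a, b))
    a, b = new_a, new_b

# Weekly cadence: the same data folded in as a single batch.
weekly_kl = kl_beta(a, b, start_a, start_b)
```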

A combination of these two approaches allows us to determine when we’ve learned “enough” about an activity to either promote it further or suggest improvements.

How it works in the real world

In the example above, we see an activity’s performance from the day it went online. Each day, we calculated Bayesian confidence intervals to track performance. Two key observations emerged:

- On day 39, the KL divergence criterion indicated that new evidence was no longer adding meaningful information: data from the preceding days had barely changed the confidence intervals.

- On day 43, the CI became narrower than a predefined threshold for the performance metric. In our system, this means we do not expect further exploration of the activity to add value, as our estimate of its performance is good enough. All measurements after day 43 are shown with a green background.
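The daily replay behind a chart like this can be sketched as below. It is a hypothetical reconstruction, not the production job: the uniform Beta(1, 1) prior, the thresholds (`width_threshold`, `kl_threshold`) and any counts you feed it are placeholder assumptions, since the real values are business-dependent.

```python
from scipy.special import betaln, digamma
from scipy.stats import beta


def kl_beta(a1, b1, a2, b2):
    """Closed-form KL(Beta(a1, b1) || Beta(a2, b2)) in nats."""
    return (betaln(a2, b2) - betaln(a1, b1)
            + (a1 - a2) * digamma(a1) + (b1 - b2) * digamma(b1)
            + (a2 - a1 + b2 - b1) * digamma(a1 + b1))


def first_stop_days(daily_counts, prior=(1.0, 1.0), width_threshold=0.01,
                    kl_threshold=1e-3, level=0.95):
    """Replay daily (impressions, clicks) and report when each criterion first fires.

    Returns (kl_day, ci_day) as 1-based day numbers, or None if never reached.
    """
    a, b = prior
    kl_day = ci_day = None
    for day, (impressions, clicks) in enumerate(daily_counts, start=1):
        new_a, new_b = a + clicks, b + impressions - clicks
        # KL criterion: today's update barely moved the posterior.
        if kl_day is None and kl_beta(new_a, new_b, a, b) < kl_threshold:
            kl_day = day
        # CI criterion: the posterior interval is narrower than the threshold.
        lower, upper = beta.interval(level, new_a, new_b)
        if ci_day is None and upper - lower < width_threshold:
            ci_day = day
        a, b = new_a, new_b
    return kl_day, ci_day
```

For instance, `first_stop_days([(400, 16)] * 60)` replays 60 synthetic days at a constant 4% click-through rate and returns the first day on which each criterion fires.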

Any stopping criterion ultimately depends on the thresholds and confidence levels you choose, and those are highly business-dependent. In our case, we rely more on the CI-width criterion than on the KL divergence criterion to stop. However, combining the two can be useful: if the CIs take too long to narrow and the KL divergence shows that you have not gained meaningful information over a certain period, that can be a sign to reevaluate whether further investment in the activity is worthwhile.
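One hypothetical way to encode that combination as a rule, assuming daily histories of interval widths and per-update KL values are already available: stop once the interval is narrow enough, flag the activity for reevaluation when the KL divergence has stayed below its threshold for a sustained window while the interval is still wide, and otherwise keep exploring. The `stall_window` length and both thresholds are placeholders.

```python
from enum import Enum


class Decision(Enum):
    CONTINUE = "continue exploring"
    STOP = "stop: estimate is precise enough"
    REEVALUATE = "reevaluate: little new information, interval still wide"


def decide(ci_widths: list[float], kl_values: list[float],
           width_threshold: float = 0.01, kl_threshold: float = 1e-3,
           stall_window: int = 7) -> Decision:
    """Combine the CI-width and KL criteria on a history of daily values.

    ci_widths[i] and kl_values[i] are the interval width and per-update KL
    divergence after day i; all thresholds are hypothetical defaults.
    """
    # CI width is checked first: it is the primary stopping signal.
    if ci_widths and ci_widths[-1] < width_threshold:
        return Decision.STOP
    # A sustained run of low-information updates while the CI is still wide.
    recent = kl_values[-stall_window:]
    if len(recent) == stall_window and all(kl < kl_threshold for kl in recent):
        return Decision.REEVALUATE
    return Decision.CONTINUE
```

A stalled KL with a wide interval typically means the activity receives too few impressions per update to learn from, which is exactly the case where reevaluating the investment makes sense.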

Implementation note

For our implementation, we used the Beta distribution from SciPy. We wrapped a snippet like the one below in a User-Defined Function (UDF) on Spark and ran it for each row of the pre-processed dataset.

```python
from scipy.stats import beta

# Example: update a Beta prior with new data
prior_alpha = 10  # Prior successes + 1
prior_beta = 90  # Prior failures + 1

new_successes = 5  # Successes (e.g. clicks)
new_trials = 100  # Impressions
new_failures = new_trials - new_successes

# Conjugate update: the posterior is again a Beta distribution
posterior_alpha = prior_alpha + new_successes
posterior_beta = prior_beta + new_failures

# Central 95% interval of the posterior
lower, upper = beta.interval(0.95, posterior_alpha, posterior_beta)
```
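Because `scipy.stats.beta.interval` broadcasts over NumPy arrays, the same computation can also be applied to many activities at once rather than row by row. The sketch below assumes the pre-processed dataset has been reduced to per-activity arrays of prior parameters, clicks and impressions (the function name and arrays are hypothetical). In PySpark, a vectorized `pandas_udf` is one option for applying such a function to batches of rows instead of one row at a time.

```python
import numpy as np
from scipy.stats import beta


def posterior_intervals(prior_alpha, prior_beta, successes, trials, level=0.95):
    """Vectorized Beta-Binomial update and central interval for many activities.

    All inputs are array-likes of equal length (one entry per activity).
    Returns (posterior_mean, lower, upper) as NumPy arrays.
    """
    successes = np.asarray(successes, dtype=float)
    trials = np.asarray(trials, dtype=float)
    if np.any(successes < 0) or np.any(successes > trials):
        raise ValueError("successes must lie in [0, trials]")
    post_alpha = np.asarray(prior_alpha, dtype=float) + successes
    post_beta = np.asarray(prior_beta, dtype=float) + trials - successes
    lower, upper = beta.interval(level, post_alpha, post_beta)
    return post_alpha / (post_alpha + post_beta), lower, upper


# Hypothetical batch of three activities sharing the prior from the example above.
mean, lower, upper = posterior_intervals(
    prior_alpha=[10, 10, 10], prior_beta=[90, 90, 90],
    successes=[5, 40, 0], trials=[100, 1_000, 20],
)
```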

When transitioning from backtesting to production, we learned a valuable lesson: while backtesting requires processing historical data day by day, production systems only need the most current state. Recognizing this distinction halfway through our implementation saved us significant time. Asking yourself early on “What’s the simplest possible production pipeline we could build?” can strip away unnecessary complexity that may have been essential during modeling but becomes redundant in a production environment.
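The difference can be sketched in a few lines, assuming daily (impressions, clicks) counts per activity; the names and numbers are hypothetical. A backtest replays the whole history to obtain the posterior for every day, while production only needs the stored (alpha, beta) state from the previous run plus today’s counts. Because the Beta-Binomial update is plain addition, both paths end in the same posterior.

```python
from collections.abc import Iterable


def update(state: tuple[float, float], impressions: int, clicks: int) -> tuple[float, float]:
    """One conjugate Beta-Binomial update of (alpha, beta)."""
    a, b = state
    return a + clicks, b + impressions - clicks


def backtest(prior: tuple[float, float],
             history: Iterable[tuple[int, int]]) -> list[tuple[float, float]]:
    """Replay the full history, keeping the posterior after every day."""
    states, state = [], prior
    for impressions, clicks in history:
        state = update(state, impressions, clicks)
        states.append(state)
    return states


prior = (1.0, 1.0)
history = [(120, 5), (80, 2), (150, 7), (100, 3)]  # hypothetical daily counts

# Backtesting: every intermediate day is kept, e.g. for charts like the one above.
all_days = backtest(prior, history)

# Production: load yesterday's stored state, apply only today's counts, store it again.
stored_state = all_days[-2]
today_state = update(stored_state, *history[-1])
```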

In conclusion, when does this approach add value?

Applying Bayesian decision modelling creates the opportunity to inform your business decisions, helping you translate data into tangible business value. For example:

- Learning quickly that an activity does not perform well and feeding this back (e.g., if the activity is not set up correctly, surfacing performance issues to Sales can lead to rapid identification of the problem and collaboration with the Supplier to fix it).

- Learning that an activity performs well and informing the next Sales steps.

- Taking more risk and testing out new activities, whilst secure in the knowledge that the risk is capped.
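A hypothetical way to turn the posterior into the first two signals: compare its interval with a benchmark rate for comparable activities. The benchmark, labels and counts are assumptions for illustration. An upper bound below the benchmark suggests underperformance worth raising with Sales; a lower bound above it suggests a strong performer; anything else remains undecided and keeps exploring.

```python
from scipy.stats import beta


def classify(successes: int, trials: int, benchmark: float,
             prior: tuple[float, float] = (1.0, 1.0), level: float = 0.95) -> str:
    """Label an activity by comparing its posterior interval with a benchmark rate."""
    a = prior[0] + successes
    b = prior[1] + trials - successes
    lower, upper = beta.interval(level, a, b)
    if upper < benchmark:
        return "underperforming"  # confidently below comparable activities
    if lower > benchmark:
        return "outperforming"    # confidently above comparable activities
    return "undecided"            # not enough evidence either way


benchmark = 0.04  # hypothetical click-through rate of comparable activities
labels = {
    "new cooking class": classify(10, 2_000, benchmark),
    "new food tour": classify(150, 2_000, benchmark),
    "very new activity": classify(1, 30, benchmark),
}
```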

By systematically applying Bayesian thinking to our marketplace, we can allocate impressions more effectively, improve the overall quality of our offering, and set up a dynamic system that continually learns and adapts.

Special thanks to Saulius Lukauskas for the whiteboard sessions shaping this project and to Valentin Mucke for reviewing this article.
