Improving Search Results for Ad Traffic with Embeddings

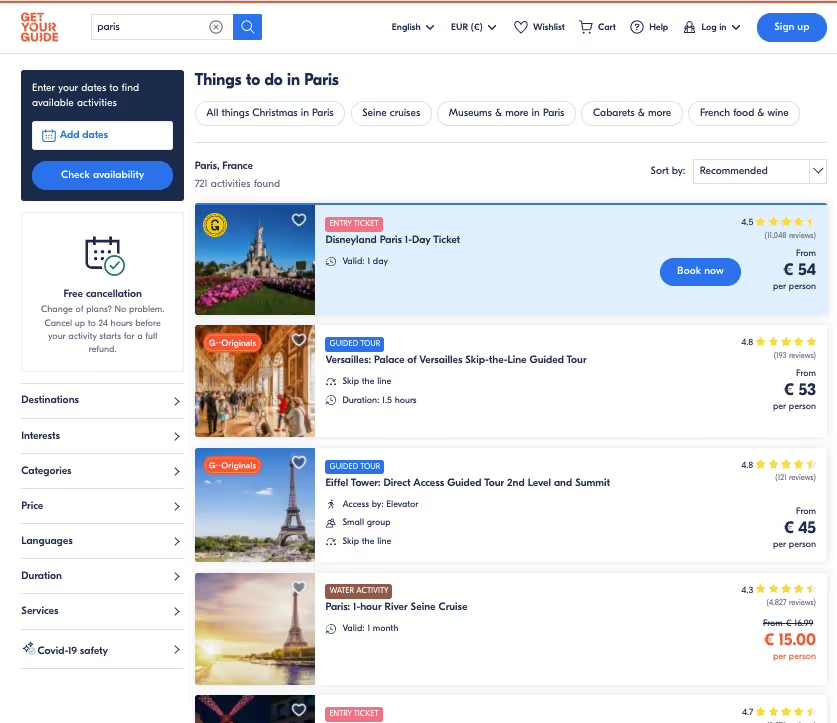




Ashraf Aaref is a Senior Software Engineer on the Search team, Viktoriia Kucherenko is a Senior Backend Engineer on the Search team, and Ansgar Grüne is a Senior Data Scientist on the Relevance and Recommendations team at GetYourGuide. They explain how they improved the search results on GetYourGuide landing pages using vectors and semantic similarity.


The Use Case

One important source of online traffic for GetYourGuide is ads that show a specific activity, delivered through channels such as search engines, social media, and external blog posts. Users who click those ads land on a web page that shows that activity at the top, called the elevated activity, along with other activities in the same city.

After spending some time analyzing our data, we believed that there was room for improvement. Because these users had already shown interest in the elevated activity, their interest very likely relates not just to the city but also to other properties of that activity. Those properties could be used to improve the relevance of the results shown on the rest of the page.

During one of our monthly hackathons, we decided to build a proof of concept based on this hypothesis: we would bring search results that are similar to the elevated activity to the top of the results page. We decided to roll out the solution as an A/B experiment to measure the impact.

Yet a question remained: how would we define similar?

Vectors representing semantic similarity

To detect how similar other activities are to the elevated one, we decided as a first step to use data that we already had. We had already created a vector representation for each activity, which we use, for example, to recommend similar activities when the desired activity isn’t available.

Those vectors have the nice property that they’re close to each other if our users consider the two activities similar. If the activities are very different, the corresponding vectors point in different directions. Figure 1 shows the idea in two dimensions.

The vectors we use, however, have 300 dimensions.

There are many different ways to calculate such vectors, called embeddings. For example, they can be constructed manually from existing data, derived from statistical models like Latent Dirichlet Allocation (LDA), or produced by language-based neural network approaches such as BERT. The vectors we use here are based on the behavior of our users, which determines which activities count as similar.

We built this data pipeline some time ago, as first presented in this meetup, following a pretty ingenious idea mentioned in the paper Real-time Personalization using Embeddings for Search Ranking at Airbnb. We applied the Word2Vec algorithm, which is intended to calculate vector representations for words by looking at their surroundings in texts. Instead of words, however, we consider the pages of our web portal. Instead of texts, we look at user journeys, i.e. the sequences of pages that users visit on GetYourGuide.

Such a journey could look like this:

DestinationPage_Berlin, SearchPage_Berlin, ActivityPage_BusTour1Berlin, SearchPage_Berlin, ActivityPage_BusTour2Berlin, ActivityPage_TicketTVTowerBerlin, BookingPage_TicketTVTowerBerlin, …

The Word2Vec algorithm creates similar vectors for words that show up in similar surroundings in text. In our context, it creates similar vectors for ActivityPages that show up in similar user journeys.
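To make the "surroundings" idea concrete, here is a minimal sketch of how a user journey can be turned into (page, neighboring page) pairs, which is the kind of input the skip-gram variant of Word2Vec learns from. It only illustrates the data view and doesn't train any model. The function name `context_pairs` and the window size of 2 are assumptions for illustration, not details of the actual pipeline.

```python
def context_pairs(journey, window=2):
    """Return (page, neighboring_page) pairs for pages at most `window` steps apart."""
    pairs = []
    for i, page in enumerate(journey):
        start = max(0, i - window)
        end = min(len(journey), i + window + 1)
        for j in range(start, end):
            if j != i:  # a page is not its own context
                pairs.append((page, journey[j]))
    return pairs


# The example journey from the text, treated like a sentence of "words".
journey = [
    "DestinationPage_Berlin",
    "SearchPage_Berlin",
    "ActivityPage_BusTour1Berlin",
    "SearchPage_Berlin",
    "ActivityPage_BusTour2Berlin",
    "ActivityPage_TicketTVTowerBerlin",
    "BookingPage_TicketTVTowerBerlin",
]

# Hypothetical window size; the real training setup may differ.
pairs = context_pairs(journey, window=2)
```

With this window, the two bus tour pages end up as each other's context, while the destination page and the booking page at opposite ends of the journey do not. Pages that repeatedly share contexts across many journeys are the ones that end up with similar vectors.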

Mix of performance score and vector similarity

Our search engine is Elasticsearch (AWS OpenSearch), where we index all activity information that’s relevant for search. Since newer versions of Elasticsearch already support storing vectors and using them in search, we decided to apply this feature.

We still want to balance similarity to the elevated activity with the popularity of activities. To do so, we compute the cosine similarity between the elevated activity’s vector and the vectors of the other activities. We then combine that similarity score with activity performance signals into a new score that we use to re-rank our results.
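As a reminder of what cosine similarity measures, the following minimal sketch computes it in plain Python. The two-dimensional vectors and their values are made up for illustration; the real activity vectors have 300 dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("cosine similarity is undefined for a zero vector")
    return dot / (norm_a * norm_b)


# Hypothetical 2-D vectors, chosen only to illustrate the idea.
bus_tour_1 = [0.9, 0.1]
bus_tour_2 = [0.8, 0.2]
tv_tower_ticket = [0.1, 0.9]

sim_tours = cosine_similarity(bus_tour_1, bus_tour_2)
sim_mixed = cosine_similarity(bus_tour_1, tv_tower_ticket)
```

Here `sim_tours` is close to 1 because both bus tour vectors point in nearly the same direction, while `sim_mixed` is much lower. The measure depends only on direction, not on vector length.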

Technical implementation

In our search pipeline, we first query Elasticsearch to get all the activities that match the input criteria. The elevated activity should also match the applied filters. The output is the list of activities with their scores. In this experiment we didn’t want to change much of the existing pipeline, but rather extend it with additional steps. That’s why we decided to store the vectors in a new Elasticsearch index, the activity vector index.

After getting results from the initial lookup (a classical search), we take the ID of the elevated activity and get its vector from the activity vector index. Next, we use this vector to query the activity vector index again with a k-NN search, which gives us vector similarity scores for the activities in the output.

As a last step, we mix scores from the normal search (popularity ranking) and similarity search by using the formula:

result_score = popularity_score + w * cosine_similarity_score
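The effect of the weight w can be seen in a small sketch of the re-ranking step. The activity names and scores below are invented for illustration, and treating an activity without a similarity score as having similarity 0 is an assumption of this sketch, not something stated in the text.

```python
def rerank(popularity_scores, similarity_scores, w):
    """Rank activity ids by popularity_score + w * cosine_similarity_score."""
    mixed = {
        activity_id: popularity + w * similarity_scores.get(activity_id, 0.0)
        for activity_id, popularity in popularity_scores.items()
    }
    # Highest mixed score first.
    ranking = sorted(mixed, key=mixed.get, reverse=True)
    return ranking, mixed


# Hypothetical scores for three activities in the same city.
popularity = {"bus_tour_2": 1.0, "tv_tower": 1.5, "museum": 1.2}
similarity = {"bus_tour_2": 0.95, "tv_tower": 0.20, "museum": 0.10}

ranking_popularity_only, _ = rerank(popularity, similarity, w=0.0)
ranking_mixed, mixed_scores = rerank(popularity, similarity, w=1.0)
```

With w = 0 the order is purely by popularity (`tv_tower`, `museum`, `bus_tour_2`). With w = 1 the activity most similar to the elevated one, `bus_tour_2`, moves to the top even though it has the lowest popularity score.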

To run the vector search query, we use the k-NN plugin in OpenSearch (AWS Elasticsearch). The plugin lets us easily search for the nearest neighbors and get their closeness scores relative to the elevated activity vector. It supports three different methods, which vary in accuracy, scalability, indexing speed, and query speed. We decided to use k-NN with a scoring script, which allows us to perform additional filtering.
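For illustration, a request to the activity vector index with the k-NN scoring script might look roughly like the sketch below. It only builds the index mapping and the query body as Python dictionaries and doesn't call any client. The field names `activity_vector` and `city_id`, the filter, and the result size are hypothetical; the `knn_vector` type, the `knn_score` script, and the `cosinesimil` space type follow the OpenSearch k-NN plugin documentation as far as we know, so check them against the plugin version you run.

```python
VECTOR_DIMENSION = 300  # dimension of the activity vectors mentioned in the text

# Hypothetical mapping for the activity vector index.
activity_vector_mapping = {
    "mappings": {
        "properties": {
            "city_id": {"type": "integer"},
            "activity_vector": {
                "type": "knn_vector",
                "dimension": VECTOR_DIMENSION,
            },
        }
    }
}


def build_knn_script_score_query(elevated_vector, city_id, size=50):
    """Build an exact k-NN query body that scores only the filtered activities."""
    if len(elevated_vector) != VECTOR_DIMENSION:
        raise ValueError("unexpected vector dimension")
    return {
        "size": size,
        "query": {
            "script_score": {
                # Pre-filter: only activities passing this filter get scored.
                "query": {"bool": {"filter": [{"term": {"city_id": city_id}}]}},
                "script": {
                    "lang": "knn",
                    "source": "knn_score",
                    "params": {
                        "field": "activity_vector",
                        "query_value": elevated_vector,
                        "space_type": "cosinesimil",
                    },
                },
            }
        },
    }


# Placeholder vector and made-up city id, for illustration only.
query_body = build_knn_script_score_query([0.0] * VECTOR_DIMENSION, city_id=17)
```

The inner `query` clause is what makes the additional filtering possible: the script scores only the documents that pass the filter. The plugin documentation also describes the returned score for this space type as shifted so that it is never negative, which is worth verifying before mixing it with other scores.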

Here’s an illustration of the whole flow for the blog post example:

[Image: illustration of the whole flow]

Results and Learnings

We shipped the improvements described above as an A/B experiment, and the results on the business metrics were positive. Users were apparently seeing activities that were better suited to their interests.

Our biggest learning from this success is that one can prove the value of new ideas quite quickly. We implemented everything in two days. This was possible because we mainly used components which already existed, like the Word2Vec vectors and Elasticsearch with its k-NN plugin available out of the box.

In addition, the people working on the project covered all the necessary knowledge and were allowed to make the necessary decisions. Because this was done in one of GetYourGuide’s regular hackathons, a more concrete organizational learning is the benefit of such hackathons. They nurture creativity, incentivize cross-team cooperation, and often result in impactful projects, for our users or for internal improvements.

Next steps

Based on the proof of concept, we’re eager to improve the process of regularly recalculating activity vectors and loading them into Elasticsearch to ensure that we don’t use stale data. Additionally, we could try out different approaches to building activity vectors.

We also want to try and change the mix between the popularity score of the activity and the closeness to the elevated activity. This means we would alter the weight w in our score formula. It could also be beneficial to vary this weight depending on the considered location, or where the user comes from.

A more general next step is to try other ways of leveraging user interest signals to improve our search results. Such attempts are already in progress. Perhaps there will be another blog post soon!

If you’re interested in joining our Engineering team, check out the open roles.
