DDataflow: An Open-Source Tool for End-to-End Tests of Machine Learning Pipelines

Explore how GetYourGuide tackled the slow feedback challenge in machine learning pipelines. This article showcases their journey in developing DDataflow, a cutting-edge tool for end-to-end testing, significantly speeding up iterations and enhancing the efficiency of data product development

Key takeaways:

In this article, Jean Carlo, Data Science Manager on our Growth Data Products and Machine Learning Platform Team talks about DDataflow, a cutting-edge tool for end-to-end testing, that significantly speeds up iterations and enhances the efficiency of data product development.

{{divider}}

The problem

Machine learning pipelines are at the heart of our data products in GetYourGuide. They usually consist of very large datasets being processed in multiple stages. To be effective in continuously experimenting with our data products, we want to iterate as fast as possible on these pipelines, spotting mistakes as early as possible. However, the time between changes and feedback on the impact of the changes can sometimes take hours or even days.

To iterate quickly on these pipelines, we need a fast feedback loop (meaning, you make a change and can observe its effect as fast as possible). A fast feedback loop is quite achievable in software engineering through unit testing in the CI. Nevertheless when transforming up to terabytes of data, it's harder.

We've tried to solve this problem by classical unit-testing and using synthetic data in our data pipelines, but our conclusion after trying it extensively in different teams and contexts is that it is quite hard to do it right in practice.

Here are some of the key reasons:

- To outline every scenario as a unit test you need to write a lot of boilerplate code, which takes considerable time to produce and maintain. And the scenarios are by definition not production, at most a sample of it, so you cant ever be sure you are really designing a realistic case.

- ML is quite experimental in nature, and you want to try many different things (datasets, structures, etc). Often, changes you want to have will entail very large refactorings in the unit tests, many of which you have no idea how they work in big collaborative projects.

- Unit tests cover what you thought in advance can be a problem, but often in the case of a data pipeline, the problem is at the integration of components rather than in their units. Due to the numerical nature of data science, some errors might only appear as distribution changes in our results, which is not so easy to spot.

You have to be quite seasoned in software and data engineering to develop effective data unit tests for machine learning. We strive to hire data scientists with strong mathematical knowledge who own the data products they build. However, engineering skills like writing performant, maintainable tests are not the primary background of most data scientists. Asking them to additionally pick up those skills can be a lengthy process. In a nutshell, the return on investment (ROI) of unit tests for data pipelines is not very good for us.

On the other hand, it's learning from the broader engineering organization in GetYourGuide that end-to-end tests are a very ROI-positive way to catch bugs. So what about end2end tests tool for ML pipelines?

The solution

We've looked for tools in the space of end2end tests for PySpark and could not find anything that suffices our needs. It's still early days in the complementary tooling space for PySpark so we went for building something ourselves.

So how to build end2end tests for ml pipelines? To be end2end we need to basically call our entire pipeline.

We first experimented with our search ranking pipeline, which is responsible for creating the main features for our ranking models. We struggled for a very long time to make changes to this pipeline with confidence. We started creating a different configuration file and triggered the full pipeline on an isolated environment but with production data.

We saw it tremendously improving our speed of delivery. However, the full pipeline in this isolated environment still took over 4 hours to complete. So we needed to be smarter.

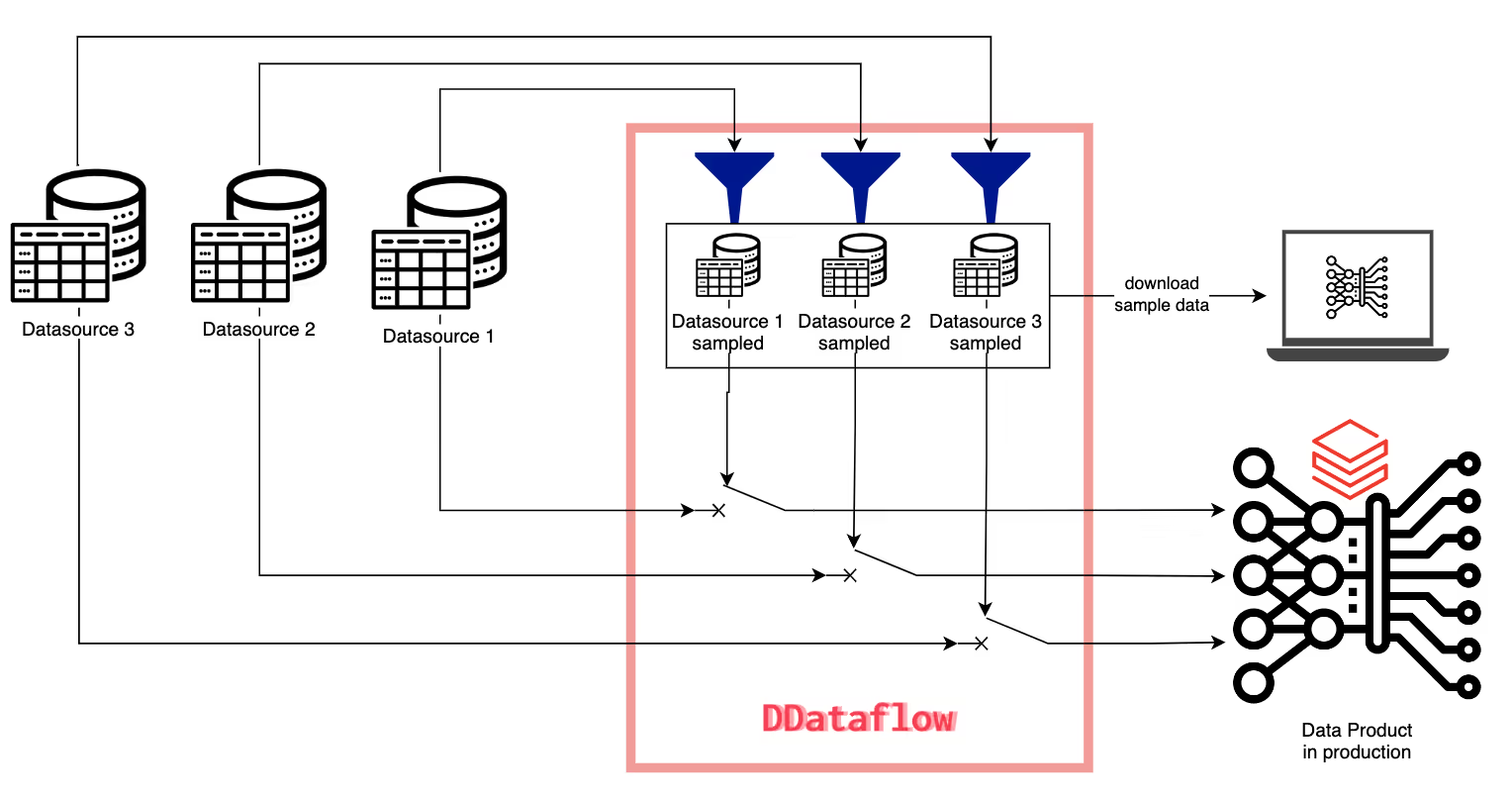

It quickly became obvious that the core bottleneck that makes it slow is the data. So we decided to sample the data down to something that can run faster and with fewer resources.

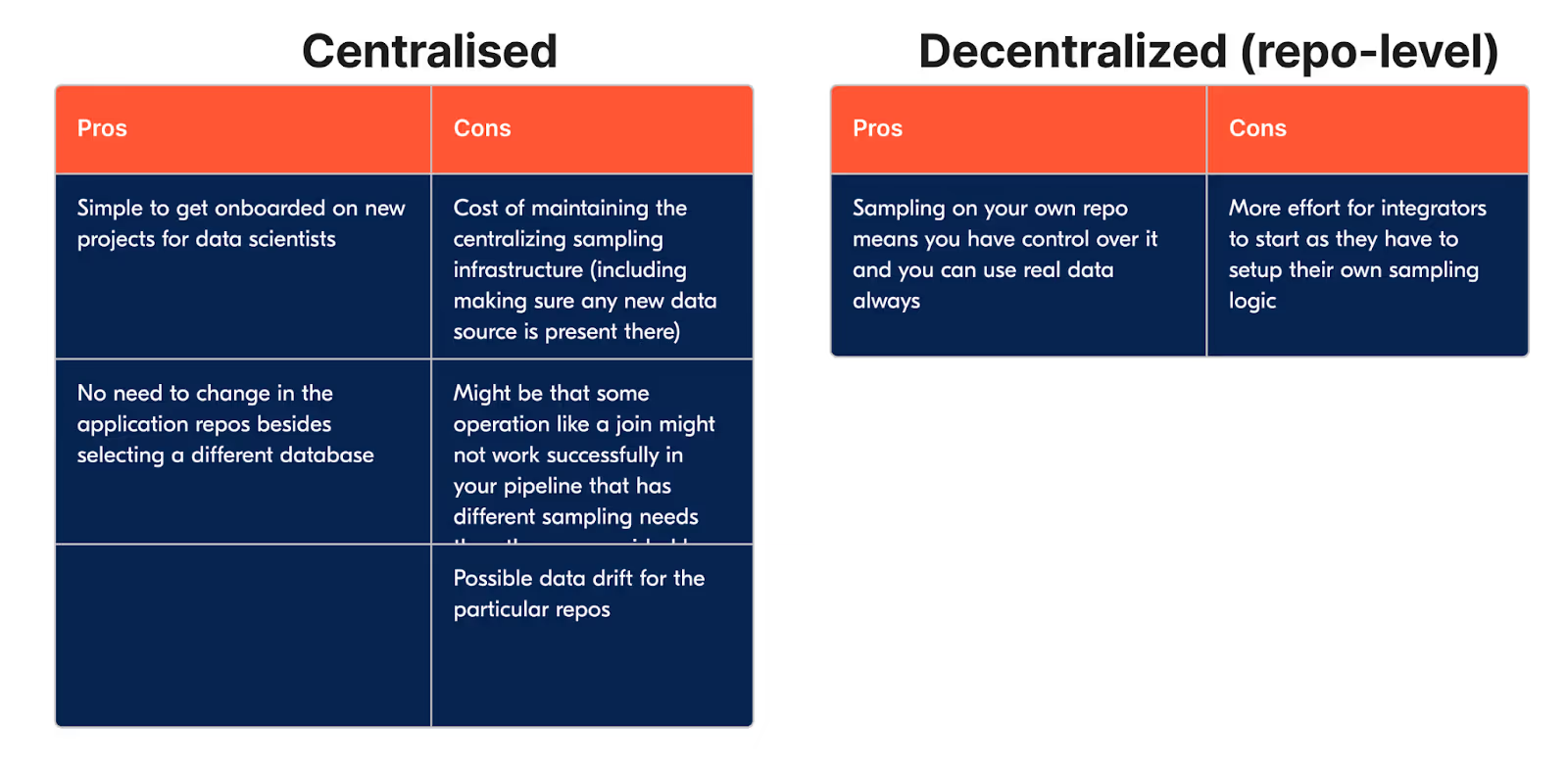

Once we opted for sampling, the next question to ask was if we should either centralize or decentralize the sampling logic. We could centralize sampling by creating a testing data warehouse database or run it decentralized with the sampling specific for each project. And as with everything in software engineering, it's about tradeoffs.

After talking about the tradeoffs, we opted for the decentralized option. One of the central considerations is that it scales better by being self-service rather than having a centralized place to control everything. Now let's dive in into the design of the tool.

Design

The interface was designed to maximize the productivity of data science teams. We've opted for a central, type-checked configuration-as-code definition of data sources. To use it looks like the following:

You can then define sample functions per dataset which simply spark filters over the original production dataset. You can easily extract the filter callables to functions and libraries that can be abstracted away. You can then read your data in the following way:

You can replace your application code anyplace you need a sampled dataframe by calling ddtaflow.source().

Or by replacing sql statements table names by ddataflow.name :

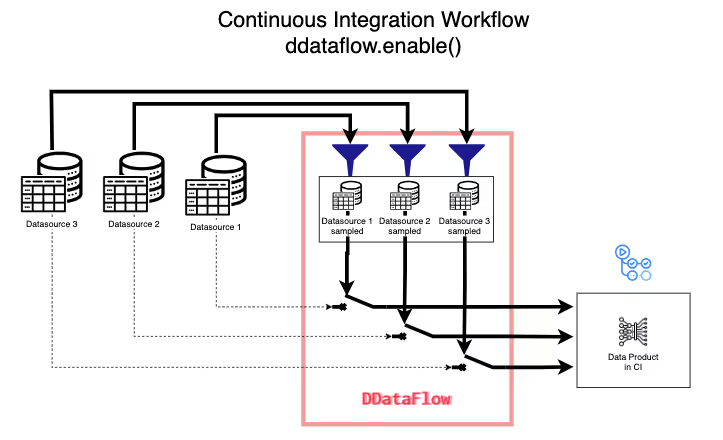

To turn on and off the sampling, you can directly do so in the code:

Or leaving the control to your environment via environment variables, useful for enabling it in the CI without changing the code.

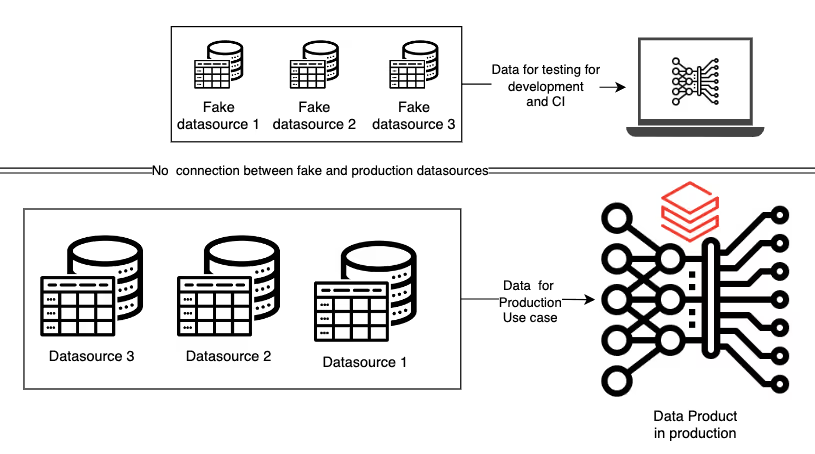

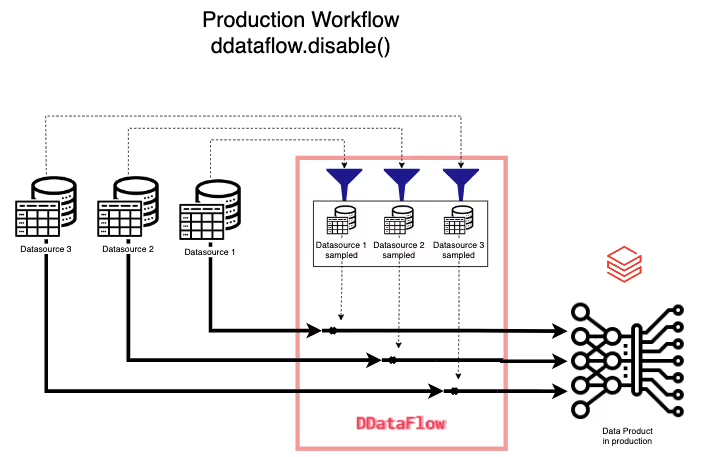

In the CI, we enable DDataflow and in production, we don't, which means your data is not sampled. That is the core workflow of DDataflow.

One other feature we added, which was very simple to do given the design, is to allow for offline development by downing the samples into your local machine.

We started this project in our monorepo for ML (mltools), given that its creation was quite exploratory and we wanted to not think about its distribution in the beginning. After it was clear we would use it, we decided to make it open-source and refactored it to its own repo.

A success story

We first validated the idea by presenting it around and creating a prototype. We then integrated it into our ranking service, getting quick feedback and making adjustments during the integration. If you want to read more about how we leverage the tool in ranking, you can check this blog post. We also ran some workshops to make sure the team could operate DDataflow. Our data-products team is very collaborative, and it was quickly caught by the other teams.

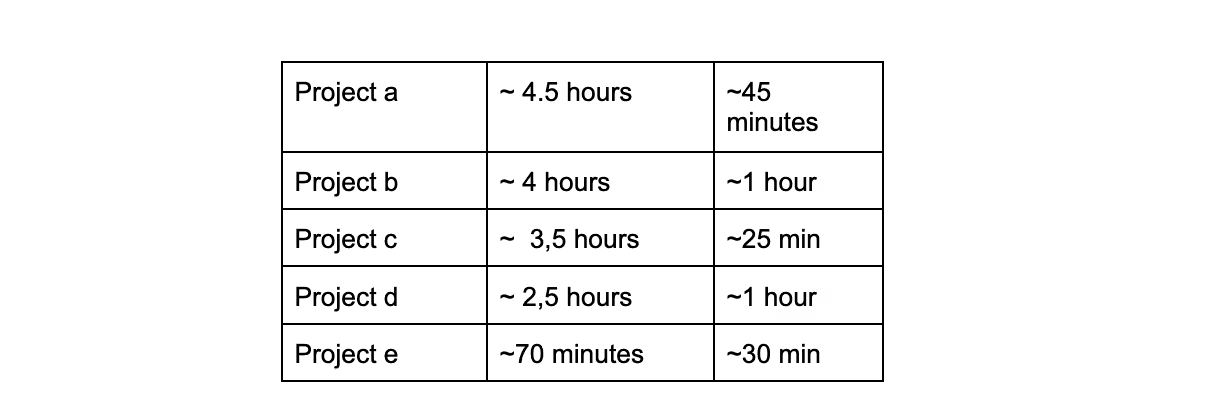

DDataflow is now being used on a majority of our production data products. To give a sense of the impact numerically, here is a table with some of our core integrations and the time in the CI to run the project before and after DDataflow.

With further sampling strategies, we are confident this time could be further optimized. Time saved in the CI is not the only factor that adding these tests improves, though. The fact that we can now ensure our pipeline is running on every change means that we detect errors that otherwise would go unnoticed until the next time it runs (usually the next day or week). Many other projects not on this list only started adding the pipelines to the CI after having the reasonable option to do it fast via DDataflow.

This tool creation process exemplifies well how we think about developing tools for our machine-learning platform and how we managed to be successful with a small set of contributors.

We focus on solving particular problems in a scalable self-service way with small tools and relying on the abstractions provided by the rest of the organization rather than trying to solve all problems with generic all-encompassing solutions. This approach also enables us to be closer to the data science problem and develop further insights on the data-science needs rather than being simple infrastructure providers.

We hope that this tool can help the community to build more reliable testable machine-learning pipelines in the future. We are also excited about possible next steps around automatic sampling strategies.

Shoutout

I want to say a special thank you to Theo for the collaboration through this project and the amazing diagrams for DDataflow. To Mathieu, Prateek, and Steven for contributions to this post. And for the data products team for quickly adopting the tool.

.JPG)

.JPG)